Artificial Intelligence (AI) is transforming the way we experience digital content, and one of the most exciting advancements in recent years is AI-powered lip sync technology. Whether in movies, video games, virtual assistants, or augmented reality (AR), AI-driven lip sync systems are enhancing the realism of digital characters and avatars, making them speak and react as naturally as humans. By seamlessly synchronizing spoken words with lip movements, AI lip sync is reshaping how we interact with digital media and redefining the boundaries of entertainment and communication.

What Is AI Lip Sync?

AI lip sync refers to the use of artificial intelligence to synchronize spoken words with lip movements in digital environments. Traditional lip-sync techniques required extensive manual animation, where animators would meticulously match a character’s speech with mouth movements. AI lip sync automates this process, allowing characters in animations, games, or even virtual assistants to speak in sync with the audio they are producing. The result is a more lifelike and immersive digital interaction.

The primary goal of AI lip sync technology is to match the audio (the speech) with the correct facial movements, particularly the mouth and lips, ensuring that the character’s speech appears as natural as possible.

How AI Lip Sync Works

The process of AI lip sync typically involves several core steps:

1. Speech Recognition and Phoneme Detection

The AI system first listens to the audio input (spoken language) and processes it through speech recognition algorithms. These algorithms break the speech down into phonemes, which are the smallest units of sound in a language. Each phoneme represents a specific sound, such as the "b" sound in "bat" or the "sh" sound in "shark."
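To make the idea of a phoneme sequence concrete, here is a minimal sketch. It does not perform speech recognition; it assumes a transcript is already available and looks words up in a tiny hand-written dictionary. The dictionary `PRONUNCIATIONS`, the function `words_to_phonemes`, and the ARPAbet-style symbols are illustrative assumptions, not part of any particular lip sync product.

```python
# Hypothetical mini pronunciation dictionary using ARPAbet-style symbols.
# A real system would derive phonemes (and their timing) from the audio itself.
PRONUNCIATIONS = {
    "bat": ["B", "AE", "T"],
    "shark": ["SH", "AA", "R", "K"],
    "hello": ["HH", "AH", "L", "OW"],
}


def words_to_phonemes(transcript):
    """Return the flat phoneme sequence for a whitespace-separated transcript."""
    phonemes = []
    for word in transcript.lower().split():
        if word not in PRONUNCIATIONS:
            raise KeyError(f"no pronunciation for {word!r}")
        phonemes.extend(PRONUNCIATIONS[word])
    return phonemes


print(words_to_phonemes("hello shark"))
# ['HH', 'AH', 'L', 'OW', 'SH', 'AA', 'R', 'K']
```

The first symbols of "bat" and "shark" are the "b" and "sh" sounds mentioned above.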

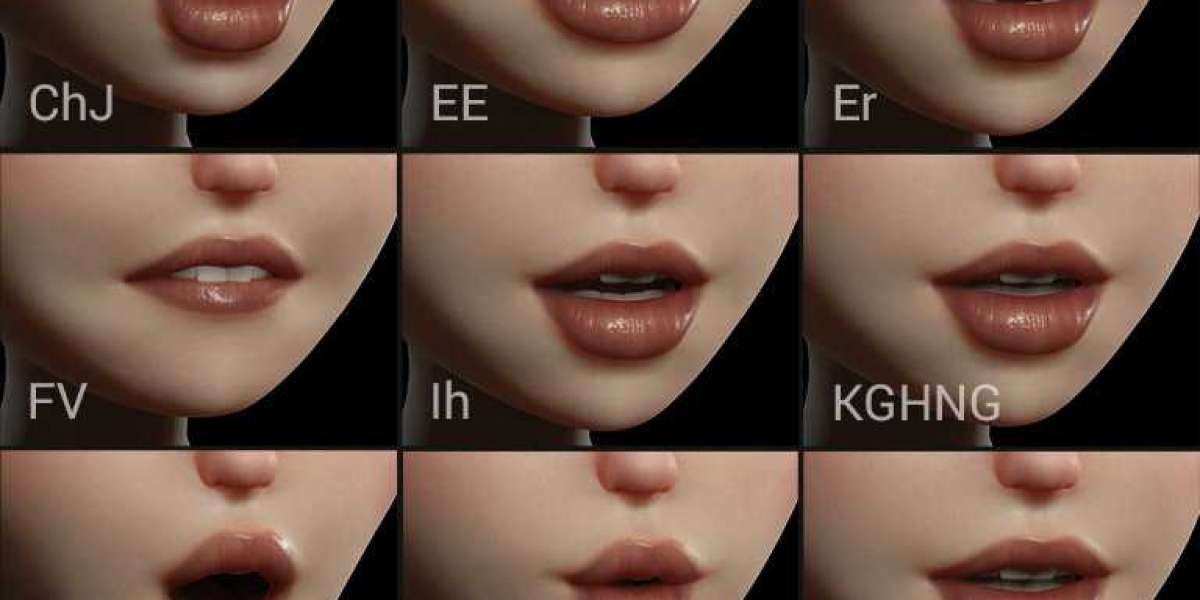

2. Mapping Phonemes to Visemes

Once the phonemes are identified, the AI uses a viseme database. Visemes are the visual counterparts of phonemes, or in simpler terms, the mouth shapes corresponding to particular sounds. For example, the sound “b” is associated with the lips coming together, while the sound “f” involves the upper teeth touching the lower lip. Because several phonemes look alike on the lips (“b,” “p,” and “m” all close the mouth), many phonemes can share a single viseme. The AI maps the detected phonemes to the appropriate mouth shapes, ensuring that the character’s mouth movements align with the audio.
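The mapping step can be sketched as a simple lookup table. The viseme names and the table `PHONEME_TO_VISEME` below are made up for illustration; real viseme sets differ between tools and are usually larger.

```python
# Hypothetical phoneme-to-viseme table; names and groupings are illustrative.
PHONEME_TO_VISEME = {
    "B": "lips_closed",
    "P": "lips_closed",
    "M": "lips_closed",
    "F": "teeth_on_lip",
    "V": "teeth_on_lip",
    "SH": "lips_rounded",
    "OW": "lips_rounded",
    "AE": "jaw_open",
    "AA": "jaw_open",
}


def phonemes_to_visemes(phonemes, default="neutral"):
    """Map each phoneme to a mouth shape, falling back to a neutral pose."""
    return [PHONEME_TO_VISEME.get(p, default) for p in phonemes]


print(phonemes_to_visemes(["B", "AE", "T"]))
# ['lips_closed', 'jaw_open', 'neutral']
```

Falling back to a neutral pose for unknown phonemes is one possible design choice; a production system might instead hold the previous shape.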

3. Facial Feature Tracking

Using computer vision technology, the system tracks key features of the character’s face, such as the lips, jaw, and eyes, to ensure that the mouth movements and facial expressions are not only synchronized with speech but also realistic. This step can include adjustments for other features like eyebrow movements or eye contact to make the character appear more emotionally engaging.
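As a rough illustration of what a tracker's output can be used for, the sketch below computes a simple "mouth openness" measure from four lip landmarks. It assumes the landmark coordinates have already been produced by some face tracker; the function `mouth_open_ratio` and the sample coordinates are hypothetical.

```python
import math


def mouth_open_ratio(upper_lip, lower_lip, left_corner, right_corner):
    """Lip gap divided by mouth width; 0.0 means the lips are closed."""
    width = math.dist(left_corner, right_corner)
    if width == 0:
        raise ValueError("mouth corners coincide")
    return math.dist(upper_lip, lower_lip) / width


# Hypothetical landmark coordinates in pixels, given as (x, y).
ratio = mouth_open_ratio((50, 60), (50, 75), (35, 68), (65, 68))
print(f"{ratio:.2f}")  # 0.50
```

Dividing by the mouth width keeps the measure roughly independent of how large the face appears in the frame.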

4. Temporal Synchronization

Finally, the AI adjusts the timing of the lip movements to ensure they align precisely with the spoken words. This is crucial because lip movements must occur at the right moments to make the speech appear synchronized and natural. For example, when a character says “hello,” the AI must ensure the character’s mouth opens and closes at the correct times to match the speech.
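One simple way to picture this step is to turn timed viseme segments into one mouth shape per video frame. The sketch below assumes each segment is a `(viseme, start_seconds, end_seconds)` tuple and that the frame rate is known; the function name and the sample timings are illustrative only.

```python
import math


def visemes_per_frame(segments, fps, default="neutral"):
    """Pick the viseme active at the start time of each video frame."""
    if not segments:
        return []
    total = max(end for _, _, end in segments)
    n_frames = math.ceil(total * fps)
    frames = []
    for i in range(n_frames):
        t = i / fps  # timestamp of frame i in seconds
        label = default
        for viseme, start, end in segments:
            if start <= t < end:
                label = viseme
                break
        frames.append(label)
    return frames


# Hypothetical timings: lips closed for 0.25 s, then open for 0.5 s.
segments = [("lips_closed", 0.0, 0.25), ("jaw_open", 0.25, 0.75)]
print(visemes_per_frame(segments, fps=4))
# ['lips_closed', 'jaw_open', 'jaw_open']
```

Frames that fall in a gap between segments get the default pose, which stands in for silence here.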

5. Rendering the Animation

After the AI completes these steps, the synchronized lip movements are rendered on the character. This can be done in real-time, particularly in interactive environments like video games or virtual assistants, or in pre-rendered media like animated films.
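When mouth shapes are rendered, switching abruptly from one pose to the next tends to look jerky, so poses are often blended over a few frames. The sketch below shows plain linear interpolation between two poses, each expressed as a dictionary of blend weights. The pose format, the weight names, and the function `blend_poses` are assumptions made for illustration, not a description of any specific renderer.

```python
def blend_poses(pose_a, pose_b, t):
    """Linearly interpolate blend weights; t=0 gives pose_a, t=1 gives pose_b."""
    t = max(0.0, min(1.0, t))  # clamp so the result stays between the poses
    keys = sorted(set(pose_a) | set(pose_b))
    return {
        k: (1 - t) * pose_a.get(k, 0.0) + t * pose_b.get(k, 0.0)
        for k in keys
    }


# Hypothetical poses: closed lips moving toward an open jaw.
closed = {"jaw_open": 0.0, "lip_press": 1.0}
opened = {"jaw_open": 1.0}
print(blend_poses(closed, opened, 0.25))
# {'jaw_open': 0.25, 'lip_press': 0.75}
```

A weight missing from one pose is treated as 0.0, so a shape fades out smoothly instead of disappearing in a single frame.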

Applications of AI Lip Sync

1. Entertainment and Animation

AI lip sync is making a significant impact on animated films, television shows, and video games. Traditionally, animators had to painstakingly match lip movements to pre-recorded voice acting, but with AI, this process is automated, allowing for more efficient production. Moreover, AI lip sync makes localization easier. When a film is dubbed into a foreign language, AI can ensure that the characters’ lips match the new audio track, which has always been a challenge in global media distribution.

2. Video Games

In the gaming industry, AI lip sync plays a crucial role in creating immersive gameplay experiences. In open-world games and RPGs, where players interact with NPCs (non-player characters), realistic conversations are essential. AI ensures that NPCs' speech matches their lip movements in real time, enhancing the feeling of interaction and immersion. This is especially important in narrative-driven games, where the relationship between the player and in-game characters is vital.

3. Virtual Assistants and Customer Service

AI lip sync is also changing how virtual assistants can be presented. Assistants like Siri, Alexa, and Google Assistant are mostly experienced as a voice with no visible source; with lip sync, users can instead interact with digital avatars whose lips move in sync with their responses, creating a more engaging and natural user experience. This can make conversations feel more personal and less robotic.

4. Educational Tools

In language learning applications, AI lip sync is helping students learn how to pronounce words by providing accurate visual feedback. The AI can generate mouth shapes and provide real-time guidance, helping learners to replicate the correct pronunciation by observing the lip movements of the AI-generated character.

5. Social Media and Content Creation

AI lip sync is also making waves in the world of content creation. Influencers, artists, and videographers are using AI to create animated avatars or digital personas that lip-sync closely to their voiceovers. This can help content creators produce more engaging and visually appealing videos. Additionally, AI-generated lip sync makes it easier for creators to produce content in multiple languages without re-recording the dialogue.

Challenges and Limitations of AI Lip Sync

Despite its impressive capabilities, AI lip sync technology faces several challenges:

1. Accurate Phoneme Recognition

One of the biggest challenges is ensuring that AI can correctly recognize and map phonemes to their corresponding visemes. Accents, dialects, and speech impediments can make phoneme detection more difficult. For AI lip sync to be effective globally, it needs to accommodate a wide range of speech patterns.

2. Real-Time Processing

For applications like live video conferencing or virtual meetings, AI lip sync must work in real time. Achieving smooth, lag-free synchronization between speech and lip movements in real time requires powerful hardware and optimized software. This is a challenge, particularly when dealing with low-bandwidth connections.
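The real-time constraint can be made concrete with a little arithmetic: at a given frame rate, all processing for one frame has to fit inside the time that frame is on screen. The sketch below checks a set of per-stage timings against that budget. The stage names and millisecond figures are invented for illustration and are not measurements of any real system.

```python
def frame_budget_ms(fps):
    """Time available to process one frame, in milliseconds."""
    return 1000.0 / fps


def fits_realtime(stage_ms, fps):
    """True if the summed per-frame stage costs fit within one frame's budget."""
    return sum(stage_ms.values()) <= frame_budget_ms(fps)


# Hypothetical per-frame costs in milliseconds.
stages = {"phoneme_detection": 12.0, "viseme_mapping": 1.0, "rendering": 15.0}

print(frame_budget_ms(25))        # 40.0
print(fits_realtime(stages, 25))  # True: 28 ms fits in a 40 ms budget
print(fits_realtime(stages, 60))  # False: 28 ms exceeds about 16.7 ms
```

The same pipeline that keeps up at 25 frames per second falls behind at 60, which is why higher frame rates call for faster hardware or lighter models.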

3. Ethical Considerations

AI-driven technologies, particularly those that manipulate video or images, raise ethical concerns. Deepfakes, where AI is used to create hyper-realistic fake videos, can lead to issues such as misinformation or identity theft. It’s essential to ensure that AI lip sync technology is used responsibly, with safeguards to prevent misuse.

The Future of AI Lip Sync

The future of AI lip sync holds exciting possibilities. As the technology improves, we can expect:

Emotionally Aware Lip Sync: Future AI models could not only sync speech but also adapt facial expressions based on the tone and emotion of the speaker, making characters even more expressive and engaging.

Multilingual Lip Sync: AI lip sync will become more adept at handling multiple languages, enabling close synchronization in foreign language dubs without the need for significant manual adjustments.

Real-Time, Interactive Experiences: With the rise of augmented reality (AR) and virtual reality (VR), AI lip sync will be integral in creating real-time, interactive avatars that respond naturally to user speech, enhancing digital social experiences.

Conclusion

AI lip sync is one of the most exciting advancements in digital media today, providing a more immersive and realistic experience in entertainment, gaming, virtual communication, and beyond. As this technology continues to evolve, it will help bridge the gap between audio and visual media, allowing for more natural, engaging, and lifelike interactions between humans and digital content. However, like any emerging technology, it will require careful attention to ensure it is used ethically and responsibly. The future of AI lip sync looks promising, and it will likely become an essential tool in shaping the digital experiences of tomorrow.